Social media moderators can’t be stuck with the job forever. Eventually, the courts may need to decide.

The saga of the Twitter Files continues to unfold on Capitol Hill, to the unabashed delight of conservatives and the utter disdain of liberals. While mainstream press coverage has been spotty and speculative at best, the episode (“billionaire buys company, exposes internal communications, a circus ensues”) will probably go down in history as one of the most interesting events of this decade.

After all, this sort of behind-the-scenes look at Fortune 500 corporate goings-on is highly unusual, if not unprecedented. Elon Musk's decision to buy Twitter and then air all its old dirty laundry flies in the face of the free-market principles that have guided business owners since the Industrial Revolution.

The new owner of a company (any company, be it a multi-billion-dollar social media bet or a hot dog stand) usually wants to preserve its reputation and profitability at all costs. Otherwise, why buy it?

Not so Elon Musk.

Buying such a huge company and then blowing it up is surely rare enough to earn an honorable mention in the history books. But as surprising as the act itself was, one of the central revelations of the Twitter Files was almost anticlimactic in its simplicity.

More than anything, the Twitter Files revealed that the subject of censorship, or, as it's called in Silicon Valley, “content moderation,” is a great cosmic black hole fraught with conflicting moral conundrums, catch-22s, and Constitutional quicksand.

To the surprise of absolutely no one, the internal emails of Twitter executives tasked with deciding what content users get to see revealed a flood of moderation demands, alongside objections to censorship. Questions about liability for illegal content posted by users have flown just as fast and furious in recent years.

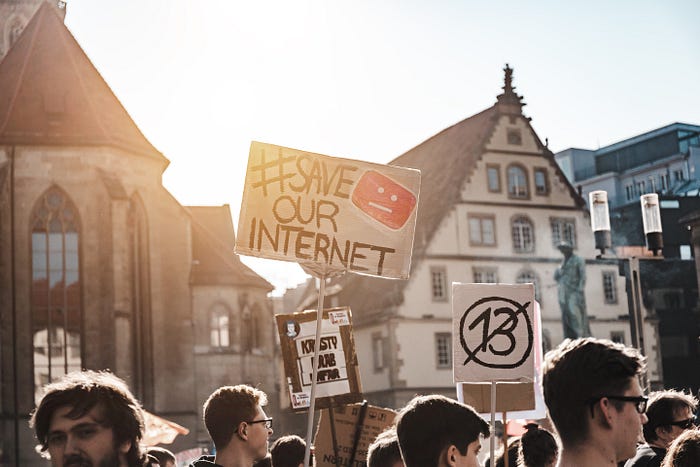

These are industry-wide issues, and social media platforms face them in other countries as well. Twitter isn’t only answering questions about censorship on Capitol Hill. Increasingly, countries around the world are turning to the courts to make the final call on content moderation.

“Russia threatens to block Twitter if site doesn’t remove alleged banned content,” reported the Associated Press on March 16, 2021.

“Twitter to India court: Overturn gov’t orders to remove content,” wrote Al Jazeera on July 6, 2022, noting that, “The US company’s attempt to get a judicial review of some Indian gov’t orders is part of a growing confrontation.”

“Twitter sued over antisemitic posts left online,” reported the BBC on January 25, 2023.

“Facebook and Twitter failed to remove nearly 90% of Islamophobic posts flagged to them,” according to a report covered by The Independent in April 2022. “Many of these posts reportedly had offensive hashtags.”

“Australian Muslim group lodges complaint against Twitter for failing to remove ‘hateful’ content,” reported The Guardian on June 26, 2022. “Complaint to Queensland Human Rights Commission accuses platform of not taking action against posts that ‘vilify’ Muslims.”

Both Twitter and Facebook failed to properly moderate user-uploaded videos of the 2019 mass shooting that killed 51 worshippers at two mosques in Christchurch, New Zealand.

“The Christchurch Attacks: Livestream Terror in the Viral Video Age” is now a subject of study for cadets at West Point.

All this suggests that the questions at the heart of censorship may be far too difficult, important, and nuanced to leave to the mercies of social media content moderators.

Do social media platforms have a right — and a responsibility — to moderate content deemed objectionable?

If only for the sake of content moderators, regulators may need to accept this responsibility.

Since “objectionable” isn’t the same as “illegal,” the ultimate solution may lie in the law.

“Twitter faces lawsuit in Germany for failure to remove anti-Semitic content,” reported EURACTIV on January 25, 2023.

This case is notable because Germany has strict laws and regulations aimed at combating anti-Semitism, and several forms of anti-Semitic expression are criminal offenses in the country.

On the civil side, the General Equal Treatment Act (Allgemeines Gleichbehandlungsgesetz or AGG) prohibits discrimination on grounds including religion and ethnic origin. This law covers a range of situations, including employment, education, housing, and access to services.

In addition, Germany has specific laws that target anti-Semitic speech, incitement to hatred, and Holocaust denial:

- The Criminal Code (Strafgesetzbuch or StGB): Section 130 of the StGB prohibits incitement to hatred against certain groups, including Jewish people. This covers both verbal and written expression, such as hate speech, public statements, and the dissemination of propaganda. Subsection 3 separately makes it a crime to publicly deny or trivialize the Holocaust.

- The Network Enforcement Act (Netzwerkdurchsetzungsgesetz or NetzDG): This law requires large social media platforms to remove manifestly unlawful content, including anti-Semitic incitement, within 24 hours of receiving a complaint, and other unlawful content generally within seven days.

The answer to the flaws revealed by the Twitter Files may not be more or less regulation of Big Tech. Better laws, greater transparency in how they are applied, and more accountability for social media platforms may offer a wiser course of action.

(contributing writer, Brooke Bell)